Using your MySQL Database from GlobiFlow

With a little help from ProcFu, you can work with your MySQL database using GlobiFlow in a super-simple way.

Here’s how…

Get a Database

We covered this in the previous blog post and are assuming you have an externally usable database connected to ProcFu. If you do not, go over part 1 first.

Make some Tables

To administer your database, you should just use Adminer by clicking on the database name on the ProcFu configuration page where you added the connection.

For the sake of examples in this post, I have just created a simple table called “mytable”

CREATE TABLE `mytable` (

`id` int(10) unsigned NOT NULL AUTO_INCREMENT,

`title` varchar(255) NOT NULL,

`status` varchar(10) NOT NULL,

PRIMARY KEY (`id`),

KEY `status` (`status`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;ProcFu Functions

ProcFu currently has 3 scripts to access MySQL. Which one to use depends on what you’re trying to get back:

mysql_command.pf- used for commands with no return, like INSERT, UPDATE, DELETE, etc. This script will always come back with “OK” (unless an error has occured).

mysql_query.pf- used for queries that return a row. These are queries like SELECT SUM or SELECT COUNT etc, or SELECT that guarantees only one row like SELECT * LIMIT 1

mysql_array.pf- used for queries that can return multiple rows, like SELECT * etc

To cater for sanitization of input parameters, PF uses question marks as placeholders. Why? Because we NEVER want to insert an un-validated string into a SQL expression. We let MySQL sanitize each parameter for us to keep us and our data safe.

Here are some common examples

- SQL

INSERT INTO mytable (title, status) VALUES (?, ?)with params["new item", "foo"]

- SQL

UPDATE mytable SET status=? WHERE id=?with params["bar", 1]

- SQL

SELECT * FROM mytable WHERE status=?with params["bar"]

Using from GlobiFlow

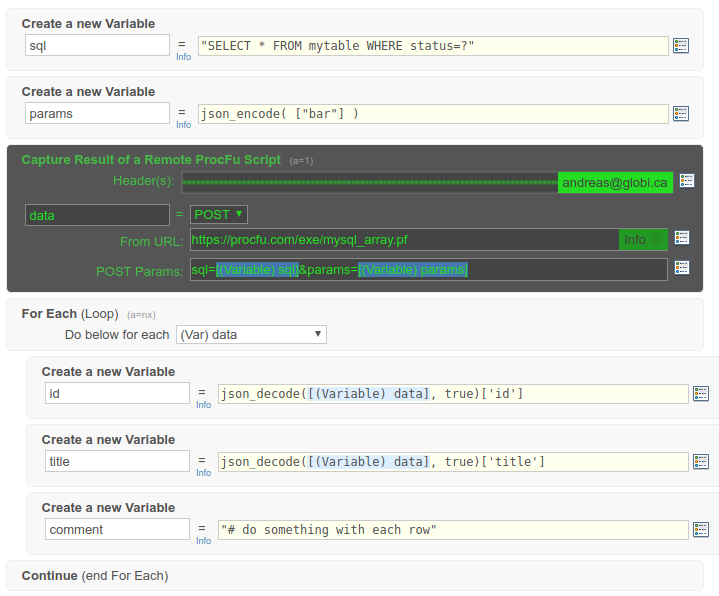

The best way to pass these values from GlobiFlow to ProcFu is to use variables. Create one variable for the sql statement, and one for the parameters in JSON.

For mysql_array queries you can then for-each the result and get each individual field with json_decode

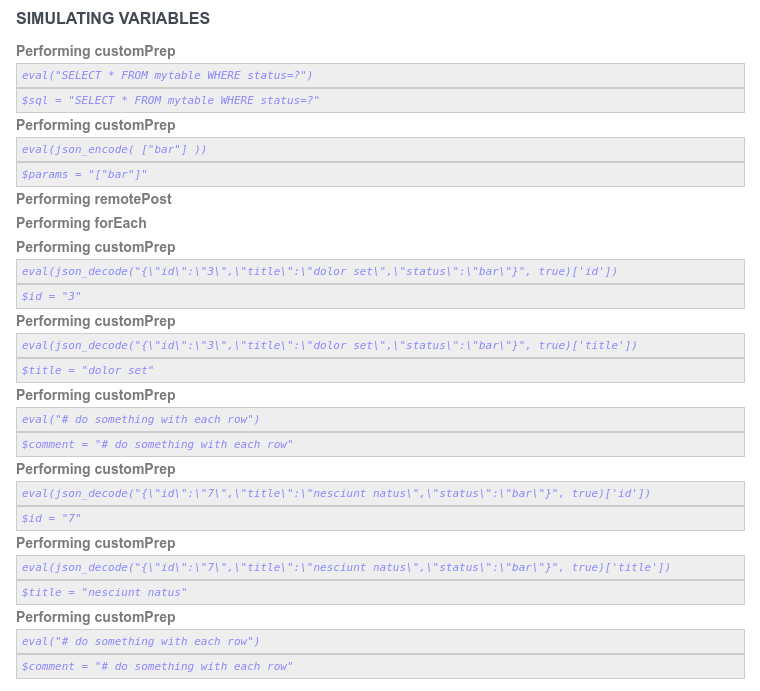

Here’s a simulation result for the above flow:

And this query took milliseconds instead of seconds, minutes, or in some cases, hours.

Don’t forget you always have phpMyAdmin still at your disposal to work with the data interactively, plus you can connect any other service to your data so that it can easily and quickly be shared with all your IT systems that need it, without API’s or special programming.

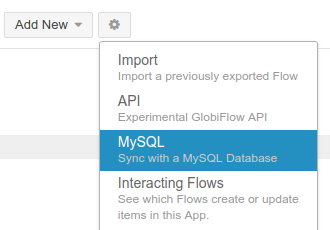

MySQL Sync

One awesome hack to query your Podio data really quickly is to set up a MySQL sync in GlobiFlow. That way any changes in Podio will be propagated to a MySQL table, and you can then use SQL SELECTs to query this data.

If you did a Search action in Podio using GlobiFlow, it would take a long time (depending on the size of your data), but using a MySQL SELECT query, would take only a split second.